Timepiece

A generative, sound-responsive installation using Sunderland’s archives

Artist: Peter McDada

How Timepiece Works

Key Features

Ambient & non-disruptive — designed to blend with its architectural setting

Generative layering — unique, non-repeating compositions

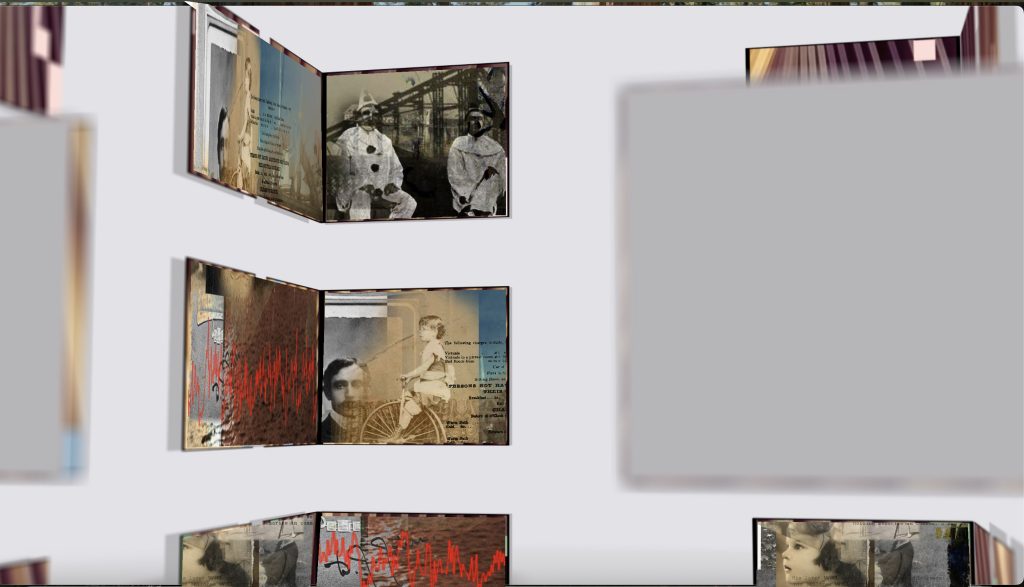

Multi-screen installation — enveloping audiences in a continuous flow

Temporal responsiveness — rhythms shift on the hour, subtly marking time

Audio collages — combining archival voices, sounds, and atmospheres
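The hourly shift in rhythm could be driven by a simple intensity envelope keyed to the clock. The sketch below is written in Python for readability; a production version would live in whichever environment is chosen for the rebuild. It assumes a linear rise and fall. The function names `hourly_swell` and `intensity`, the 90-second rise, the 8-minute fall and the 0.3 baseline are all hypothetical values, not part of the proposal.

```python
def hourly_swell(seconds_past_hour: float, rise: float = 90.0, fall: float = 480.0) -> float:
    """Return a swell level in [0, 1] for a position within the hour.

    Rises linearly for `rise` seconds after the hour, falls back over `fall`
    seconds, then stays at 0 (the slow drift) until the next hour.
    Both durations are assumed values.
    """
    t = seconds_past_hour % 3600.0  # wrap so any elapsed time maps into one hour
    if t < rise:
        return t / rise                  # swelling up towards the peak
    if t < rise + fall:
        return 1.0 - (t - rise) / fall   # easing back down to the drift
    return 0.0                           # baseline drift for the rest of the hour


def intensity(seconds_past_hour: float, baseline: float = 0.3) -> float:
    """Blend an assumed baseline drift level with the hourly swell."""
    return baseline + (1.0 - baseline) * hourly_swell(seconds_past_hour)
```

A single `intensity` value like this could scale both audio gain and the number of visible image layers, so that sound and image swell together on the hour and then return to a slower drift.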

Unlike a film or looped video, Timepiece is a generative system that never plays the same way twice.

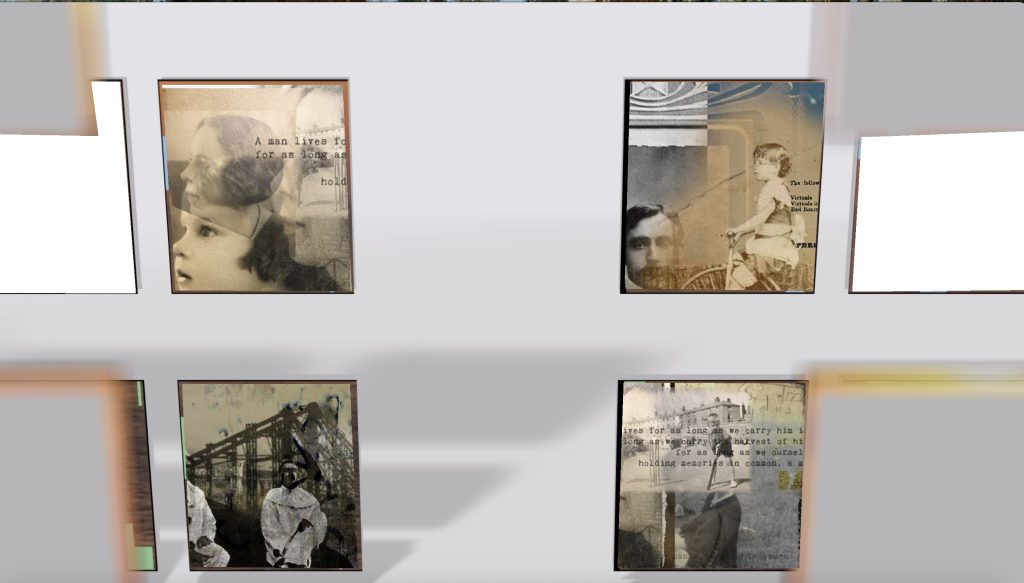

- Layered Imagery: Archival images are layered like translucent sheets of memory. At any given moment, up to five images might overlap, some fully visible, others faint ghosts beneath the surface.

- Randomised Timings: Each image is assigned a random duration and opacity, meaning no one can predict when or how fragments will appear. Two visitors standing side by side might witness entirely different juxtapositions.

- Always Changing: There is no fixed start or end point. The work runs continuously, recombining itself across days, weeks, and months. The Sunderland archive is never shown the same way twice.

- Sound Integration: Audio responds dynamically, with layered voices, ambient textures, and subtle rhythms echoing the visual structure. At hourly intervals, sound and image swell together before returning to a slower drift.
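The layering and randomised timing described above can be sketched as a small scheduler. This is a minimal illustration, not the iCoda engine itself. Only the five-layer cap comes from the description. The `Layer` and `LayerScheduler` names, the 20 to 120 second duration range and the 0.1 to 1.0 opacity range are hypothetical choices made for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Layer:
    """One archival image currently on screen (hypothetical structure)."""
    image: str
    opacity: float     # 0.0 (invisible) to 1.0 (fully visible)
    expires_at: float  # time in seconds when this layer is removed


class LayerScheduler:
    """Keeps at most `max_layers` overlapping images, each with a random
    duration and opacity, so the sequence of juxtapositions never repeats."""

    def __init__(self, images, max_layers=5, min_dur=20.0, max_dur=120.0, seed=None):
        self.images = list(images)
        self.max_layers = max_layers  # "up to five images might overlap"
        self.min_dur = min_dur        # assumed duration range, in seconds
        self.max_dur = max_dur
        self.rng = random.Random(seed)
        self.layers = []

    def update(self, now):
        # Drop layers whose random duration has elapsed.
        self.layers = [layer for layer in self.layers if layer.expires_at > now]
        # Refill free slots with randomly chosen images.
        while len(self.layers) < self.max_layers:
            self.layers.append(Layer(
                image=self.rng.choice(self.images),
                opacity=self.rng.uniform(0.1, 1.0),  # faint ghost to fully visible
                expires_at=now + self.rng.uniform(self.min_dur, self.max_dur),
            ))
        return self.layers
```

Calling `update` once per frame (or once per second) with the current time keeps the stack full while individual layers arrive and leave on unpredictable schedules. Passing a fixed `seed` makes a run reproducible for testing; leaving it as `None` gives a different composition on every start.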

Core Artistic Approach

Visuals:

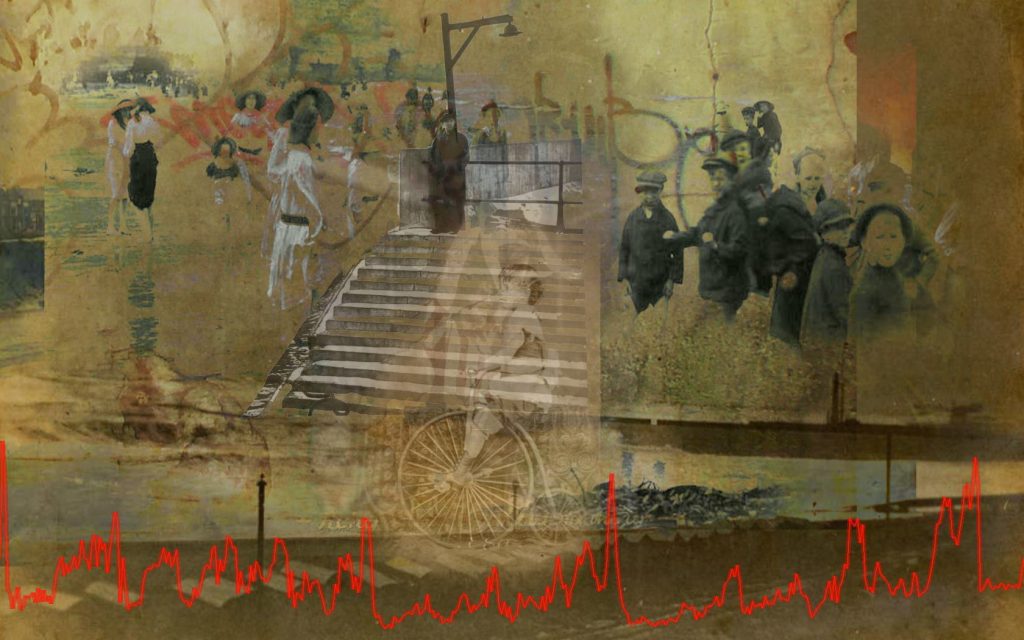

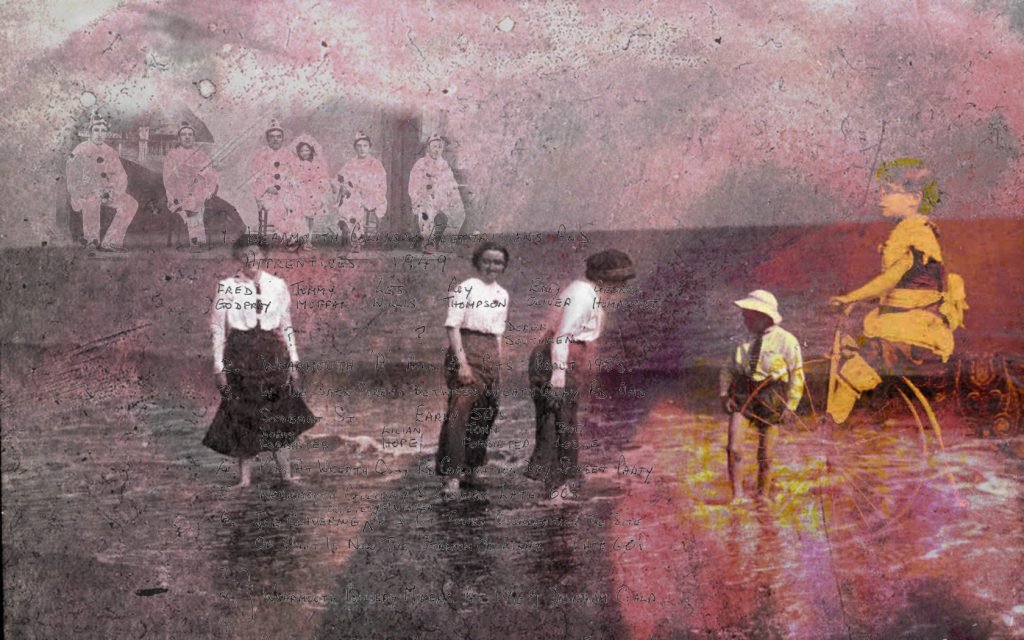

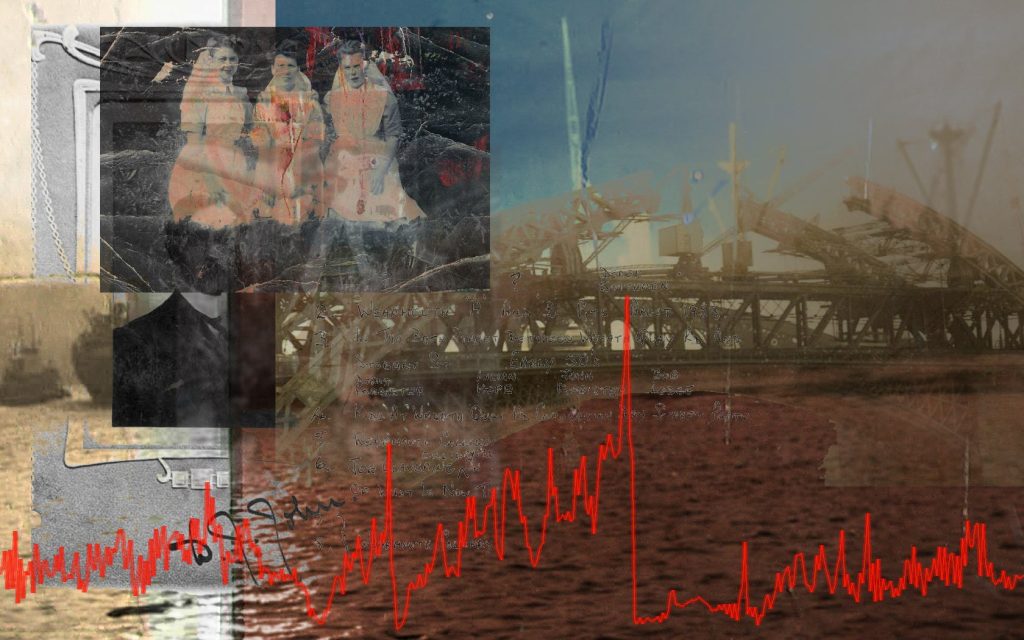

- The video canvas becomes a living archive—a multi-layered visual tapestry.

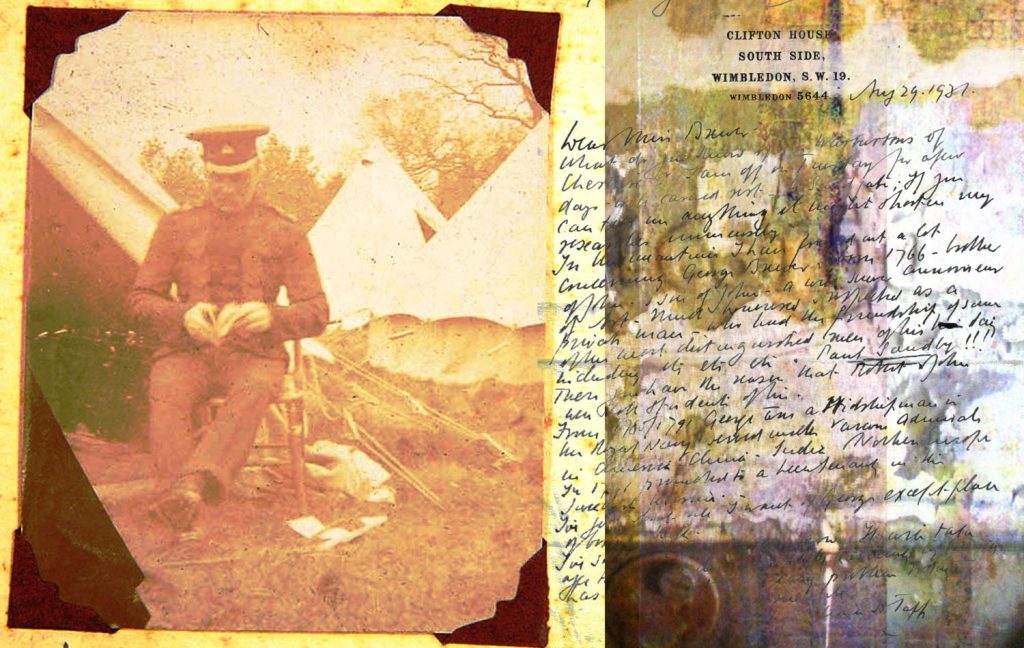

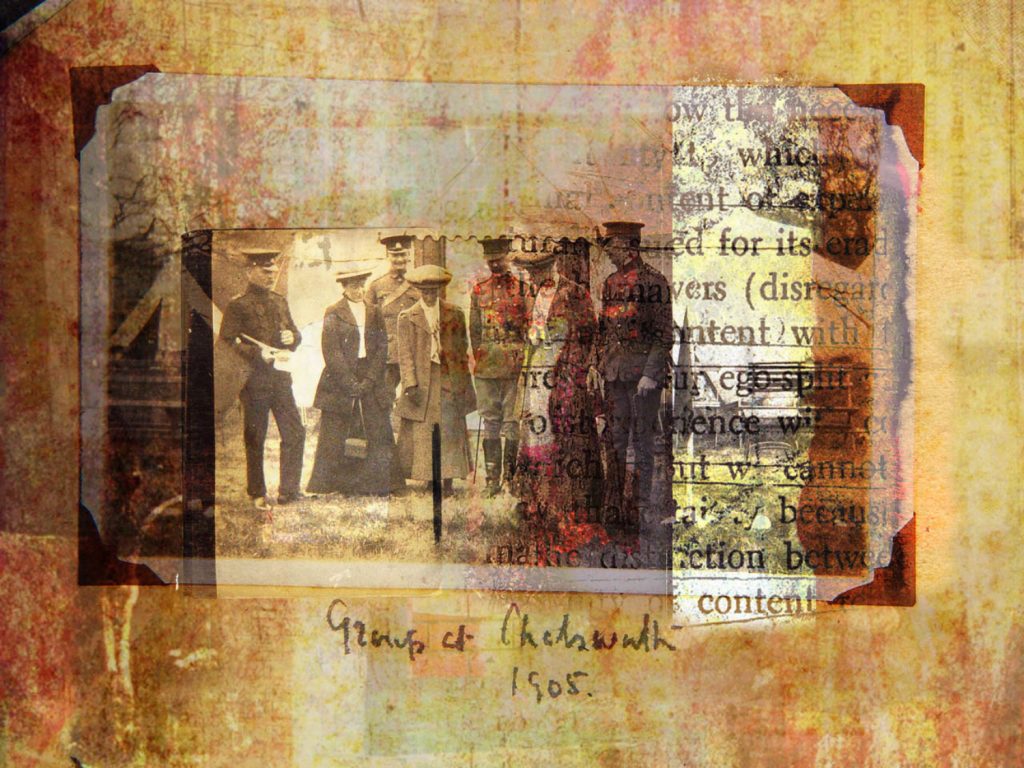

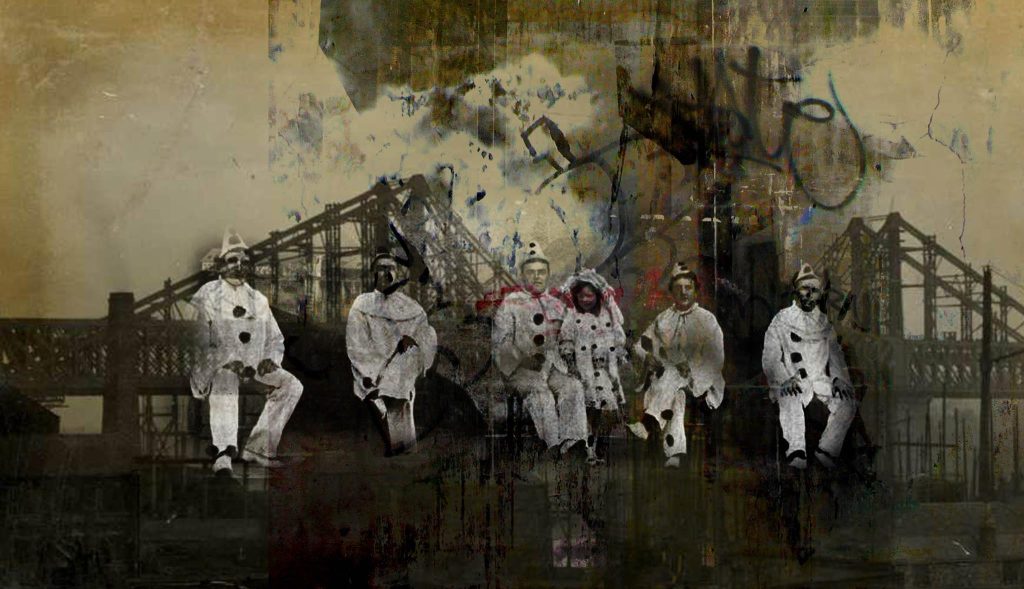

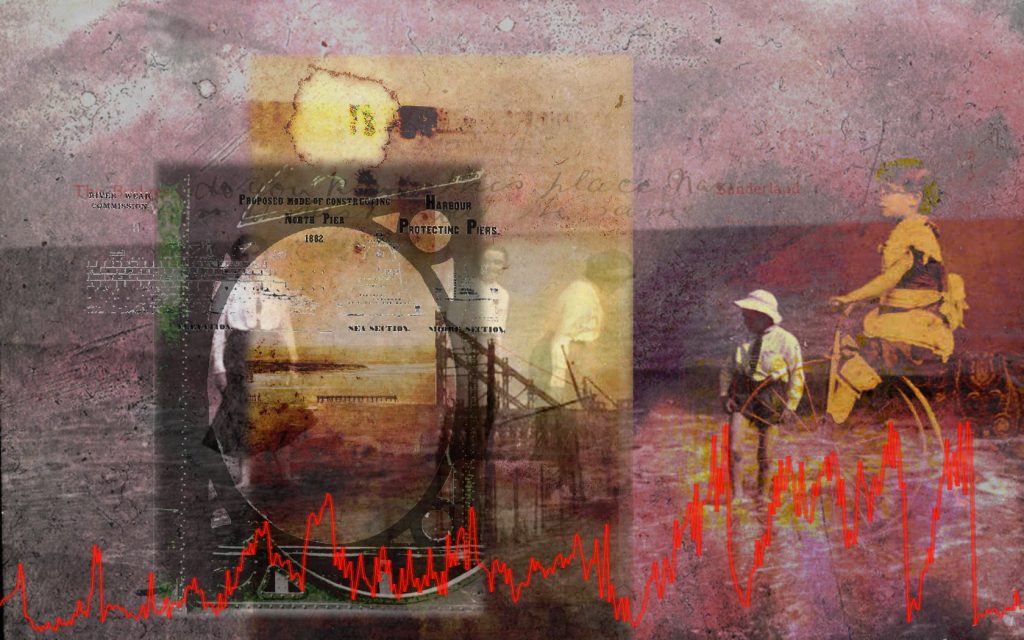

- Archival materials from Sunderland’s history (photos, film, textures like paper, fabric, stone) are combined with images from community members (e.g. submitted family photos, textures of homes, old shop signs, clocks, wool, industrial artefacts).

- Using a revived and adapted iCoda engine, images fade, shift, bleed, or imprint on one another throughout the day, recreating your original inspiration (flood-damaged photos and the palimpsest effect).

- The screen becomes a kind of living wall or “clockface” where each hour reveals different textures, associations, and moods.

- The visual language can be rooted in Sunderland’s coastal light, industrial remnants, and domestic memory, pulling threads across history.

Audio:

- Sound will follow a spatial choreography—surround sound across the atrium, subtly directional, responsive to time.

- You can incorporate:

- Archival recordings (bells, train sounds, ships, markets, factories)

- Field recordings (contemporary Sunderland ambience: sea wind, children playing, street noise)

- Musical textures, slowly evolving tonal layers, perhaps using modular synthesis or tape loop aesthetics (repetition with decay)

- Reconstructed sounds of clocks or bells (can be literal or abstracted)

- Oral histories / voices, softly embedded (e.g. clockmakers, seamstresses, weavers, teachers), emphasising Sunderland’s labour history

- Every hour, day, and month has its own sonic theme, maintaining consistency with subtle variation. For instance:

- Morning: light, breathy, high tones

- Noon: layered machinery rhythm or church bell motifs

- Evening: tape hiss, distant voices, low drones

- Seasonal shifts reflected in timbre and tempo, e.g. summer = warm reverbs, winter = icy grain textures
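One way to select a sonic theme from the clock is a pair of lookups: the hour picks the time-of-day period, and the month picks the season. The sketch below assumes hour boundaries the proposal does not specify (morning 05:00 to 11:00, noon 11:00 to 17:00, evening otherwise) and uses Northern Hemisphere meteorological seasons. `period_for`, `sonic_theme` and the two lookup tables are hypothetical names.

```python
from datetime import datetime


# Assumed hour boundaries: the proposal names the periods but not their times.
def period_for(hour: int) -> str:
    if 5 <= hour < 11:
        return "morning"  # light, breathy, high tones
    if 11 <= hour < 17:
        return "noon"     # layered machinery rhythm or church bell motifs
    return "evening"      # tape hiss, distant voices, low drones (also covers night)


# Meteorological seasons for the Northern Hemisphere.
SEASON_BY_MONTH = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}

# Only the summer and winter textures are given in the proposal.
SEASON_TEXTURE = {"summer": "warm reverbs", "winter": "icy grain textures"}


def sonic_theme(when: datetime) -> dict:
    """Pick the time-of-day period and seasonal texture for a moment in time."""
    season = SEASON_BY_MONTH[when.month]
    return {
        "period": period_for(when.hour),
        "season": season,
        "texture": SEASON_TEXTURE.get(season),  # None for spring/autumn until defined
    }
```

Keeping the themes in plain tables means the artist can retune boundaries or add spring and autumn textures without touching the playback logic.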

Metaphors: Palimpsest + Tapestry

- You’ve already developed a dual conceptual framework:

- Palimpsest: Image erosion, memory transfer, time smudging one layer into another.

- Tapestry: Threads of community memory and history—interwoven visual/audio motifs, stitched together across generations.

- These metaphors help anchor the audience—especially important for public engagement and PR.

Examples of past work using the Palimpsest Technique

Technical Implementation

- Revamp your iCoda system to run on the high-performance media server, either by adapting the existing code with assistance or by rebuilding it in TouchDesigner, Max/MSP, or Unreal Engine.

- Modular structure: You deliver 12 core video/audio modules (one per month), with layered logic that evolves within each module.
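With one module per month, the playback system needs to know which module is active and how far through the month it is, so that the module's layered logic can evolve over time. A minimal sketch, assuming module numbers 1 to 12 map directly to calendar months; `module_and_progress` is a hypothetical helper.

```python
import calendar
from datetime import datetime


def module_and_progress(when: datetime) -> tuple[int, float]:
    """Return (module number 1-12, fraction of the current month elapsed)."""
    days_in_month = calendar.monthrange(when.year, when.month)[1]
    seconds_in_month = days_in_month * 24 * 3600
    elapsed = ((when.day - 1) * 24 * 3600
               + when.hour * 3600 + when.minute * 60 + when.second)
    return when.month, elapsed / seconds_in_month
```

For example, midnight on 15 February 2025 gives module 2 at progress 0.5. How a module uses that progress value (for instance, crossfading into the next month's module over its final days) is left open here.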

Public Engagement & Legacy

- We could offer community image/sound collection workshops to feed the archive.

- Provide an interactive kiosk or QR trail explaining the metaphors, time cycles, or “what you’re hearing now.”

- PR framing: “A Sunderland time tapestry made from memory, sound and light.”

Key Components

🎞️ Desktop Version

- contentimages/ – reactive image assets triggered by sound

- mainImages/ – slow background image slideshow

- contentvideos/ – short moving image loops (used similarly to content images)

- screenshots/ – folder for user-generated screen captures

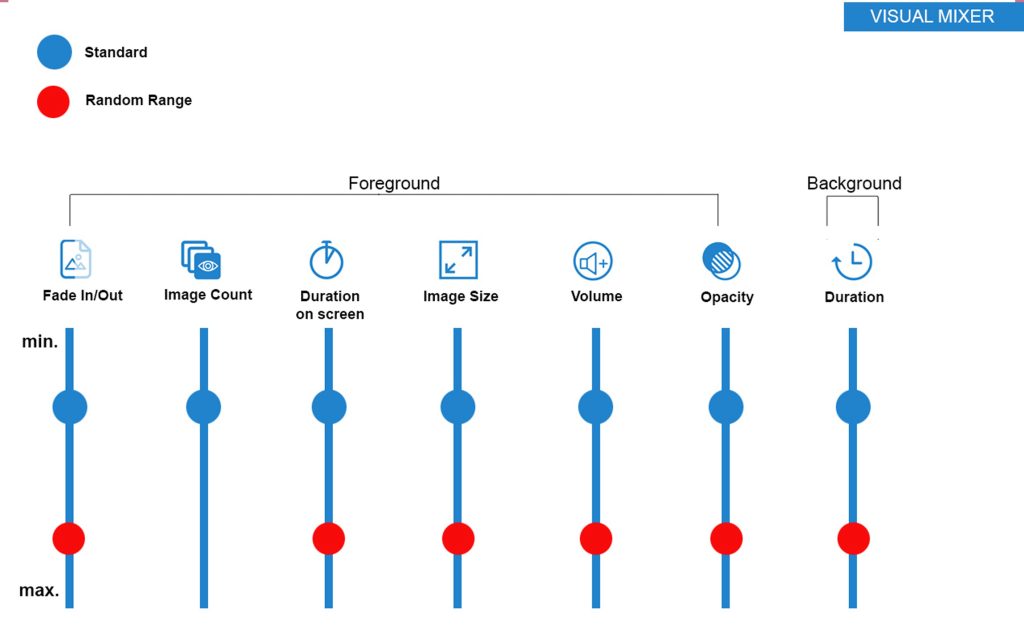

- mixer controls (inside app GUI) – determine:

- Duration of background and content image display

- Number of simultaneous content images on screen

- Overall visual tempo based on sound input

- verdana.ttf – UI font (possibly for watermark/text overlays)
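How the existing mixer turns sound input into visual tempo is not documented here, so the following is only a guess at one plausible mapping. It measures the RMS level of an incoming audio block and uses it to scale the number of content images and their display time. `MixerSettings`, `rms`, `visual_response` and every default value are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class MixerSettings:
    """Hypothetical stand-in for the desktop app's mixer sliders."""
    background_duration: float = 30.0  # seconds per mainImages/ picture
    content_duration: float = 4.0      # base seconds per content image
    max_content_images: int = 5        # simultaneous content images on screen
    sensitivity: float = 1.0           # how strongly sound drives the visuals


def rms(samples) -> float:
    """Root-mean-square level of one block of samples in the range [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def visual_response(samples, mixer: MixerSettings) -> tuple[int, float]:
    """Map input loudness to (content images to show, seconds each stays up)."""
    level = min(1.0, rms(samples) * mixer.sensitivity)
    count = round(level * mixer.max_content_images)
    # Louder input speeds the visual tempo: display time halves at full level.
    return count, mixer.content_duration / (1.0 + level)
```

Silence leaves the screen to the background slideshow, while full-scale input fills every content slot and halves each image's display time.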

📱 Unity Version (Mobile)

- Standard Unity folders present: Assets/, Packages/, ProjectSettings/

- Includes androidkey, icodd.xml, and platform-specific subfolders (ios/)

How iCoda Works

- Users can control “mixer” settings (via UI sliders or inputs) to:

- Adjust the pace of the background slideshow

- Define how reactive content images are (number on screen, decay rate)

- The final composition can be screen captured to preserve unique visual moments—each a product of chance, sound, and time.
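The "number on screen" and "decay rate" controls could be modelled as a capped pool of sound-triggered images whose opacity decays exponentially with age. This is a sketch under that assumption; iCoda's actual fade curve is not described. `ContentImage`, `ContentPool`, the per-second decay units and the 0.01 visibility cutoff are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ContentImage:
    name: str
    born: float  # time (seconds) when a sound triggered it


@dataclass
class ContentPool:
    """Sound-triggered content images that fade out at a fixed decay rate."""
    max_on_screen: int = 5
    decay_rate: float = 0.5  # per second; higher values fade faster (assumed units)
    images: list = field(default_factory=list)

    def trigger(self, name: str, now: float) -> None:
        self.images.append(ContentImage(name, now))
        # Evict the oldest images once the on-screen limit is exceeded.
        while len(self.images) > self.max_on_screen:
            self.images.pop(0)

    def opacities(self, now: float, cutoff: float = 0.01):
        """Return (name, opacity) pairs, dropping images faded below `cutoff`."""
        # Exponential decay: opacity = exp(-decay_rate * age).
        faded = [(img, math.exp(-self.decay_rate * (now - img.born)))
                 for img in self.images]
        self.images = [img for img, alpha in faded if alpha >= cutoff]
        return [(img.name, alpha) for img, alpha in faded if alpha >= cutoff]
```

With exponential decay, a single slider value sets how long each fragment lingers, while the cap keeps busy passages of sound from flooding the screen.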

Current Issues

- Desktop version is functional but platform-dependent (the .app extension suggests it’s Mac-only).

- Code refactor or rebuild is required to ensure cross-platform support and long-term maintainability.

- Sound/image layering logic can be rebuilt in:

- TouchDesigner (ideal for real-time visuals/audio)

- Max/MSP with Jitter

- Unity with FMOD/Wwise

- Or coded from scratch in Processing or openFrameworks

This can be rectified with the current budget.